About Me

I am a Doctoral student at York University under the supervision of Dr. Marcus Brubaker and Dr. Kosta Derpanis. I am interested in computer vision and machine learning. I am interested in generative modeling, view synthesis, and scene understanding problems.

News

- Jul 1, 2024 Paper accepted to ECCV 2024: PolyOculus: Simultaneous Multi-view Image-based Novel View Synthesis

- Jul 13, 2023 Paper accepted to ICCV 2023: Long-Term Photometric Consistent Novel View Synthesis with Diffusion Models

- Oct 31, 2022 Awarded the NSERC CGS-D Scholarship

- Mar 31, 2021 Accepted invitation to the Vector Institute Postgraduate Affiliate Program

- Sep 25, 2020 Paper accepted to NeurIPS 2020: Wavelet Flow: Fast Training of High Resolution Normalizing Flows

- Aug 6, 2020 Successfully defended Master's thesis

- Apr 29, 2020 Accepted VISTA Doctoral scholarship at York University

- Mar 11, 2020 Ended internship at Borealis AI

- Mar 4, 2020 Accepted Offer of Admission to York University Computer Science PhD. Program

- Nov 12, 2019 Started internship at Borealis AI

- Oct 30, 2019 Accepted MITACS Accelerate award in partnership with Borealis AI

- May 2, 2018 Accepted VISTA Masters scholarship at York University

- Apr 1, 2018 Accepted the Ontario Graduate Scholarship (OGS)

Projects

PolyOculus: Simultaneous Multi-view Image-based Novel View Synthesis

Jul 1, 2024

This paper considers the problem of generative novel view synthesis (GNVS), generating novel, plausible views of a scene given a limited number of known views. Here, we propose a set-based generative model that can simultaneously generate multiple, self-consistent new views, conditioned on any number of views. Our approach is not limited to generating a single image at a time and can condition on a variable number of views. As a result, when generating a large number of views, our method is not restricted to a low-order autoregressive generation approach and is better able to maintain generated image quality over large sets of images. We evaluate our model on standard NVS datasets and show that it outperforms the state-of-the-art image-based GNVS baselines. Further, we show that the model is capable of generating sets of views that have no natural sequential ordering, like loops and binocular trajectories, and significantly outperforms other methods on such tasks.

Long-Term Photometric Consistent Novel View Synthesis with Diffusion Models

Apr 21, 2023

Novel view synthesis from a single input image is a challenging task, where the goal is to generate a new view of a scene from a desired camera pose that may be separated by a large motion. The highly uncertain nature of this synthesis task due to unobserved elements within the scene (i.e., occlusion) and outside the field-of-view makes the use of generative models appealing to capture the variety of possible outputs. In this paper, we propose a novel generative model which is capable of producing a sequence of photorealistic images consistent with a specified sequence and a single starting image. Our approach is centred on an autoregressive conditional diffusion-based model capable of interpolating visible scene elements and extrapolating unobserved regions in a view and geometry consistent manner. Conditioning is limited to an image capturing a single camera view and the (relative) pose of the new camera view. To measure the consistency over a sequence of generated views, we introduce a new metric, the thresholded symmetric epipolar distance (TSED), to measure the number of consistent frame pairs in a sequence. While previous methods have been shown to produce high quality images and consistent semantics across pairs of views, we show empirically with our metric that they are often in consistent with the desired camera poses. In contrast, we demonstrate that our method produces both photorealistic and view-consistent imagery.

Wavelet Flow: Fast Training of High Resolution Normalizing Flows

Sep 25, 2020

Normalizing flows are a class of probabilistic generative models which allow for both fast density computation and efficient sampling and are effective at modelling complex distributions like images. A drawback among current methods is their significant training cost, sometimes requiring months of GPU training time toachieve state-of-the-art results. This paper introduces Wavelet Flow, a multi-scale, normalizing flow architecture based on wavelets. A Wavelet Flow has an explicit representation of signal scale that inherently includes models of lower resolution signals and conditional generation of higher resolution signals, i.e., super resolution. A major advantage of Wavelet Flow is the ability to construct generative models for high resolution data (e.g., 1024×1024 images) that are impractical with previous models. Furthermore, Wavelet Flow is competitive with previous normalizing flows in terms of bits per dimension on standard (low resolution) benchmarks while being up to 15× faster to train.

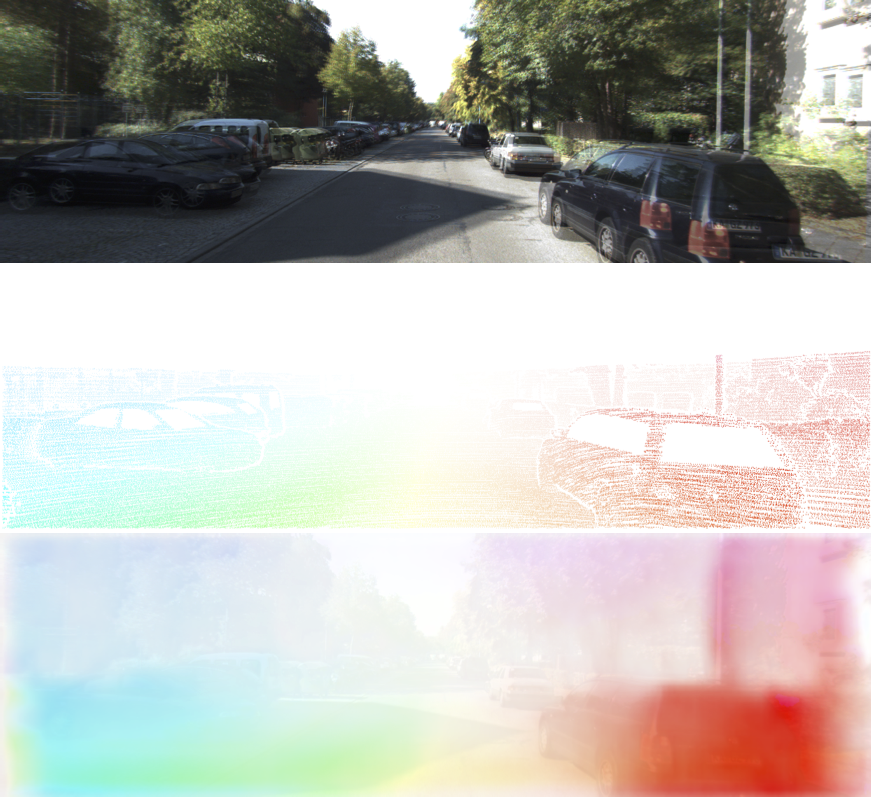

Back to Basics: Unsupervised Learning of Optical Flow via Brightness Constancy and Motion Smoothness

Aug 29, 2016

Recently, convolutional networks (convnets) have proven useful for predicting optical flow. Much of this success is predicated on the availability of large datasets that require expensive and involved data acquisition and laborious labeling. To bypass these challenges, we propose an unsupervised approach (i.e., without leveraging groundtruth flow) to train a convnet end-to-end for predicting optical flow between two images. We use a loss function that combines a data term that measures photometric constancy over time with a spatial term that models the expected variation of flow across the image. Together these losses form a proxy measure for losses based on the groundtruth flow. Empirically, we show that a strong convnet baseline trained with the proposed unsupervised approach outperforms the same network trained with supervision on the KITTI dataset.